Uncategorized

Swiping To The Answer

Gotta Go Fast: Image Based Comparisons For Speedrunning

LS: My Time Is Now

LS: We’re Going to PAX!

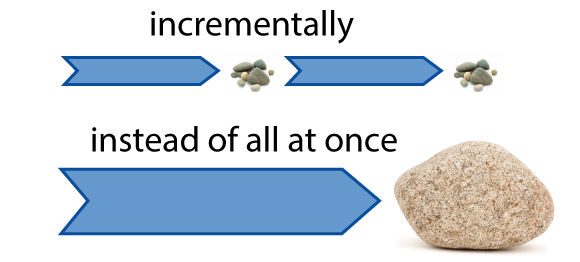

LS: The C++ is dead, Long Live the C++

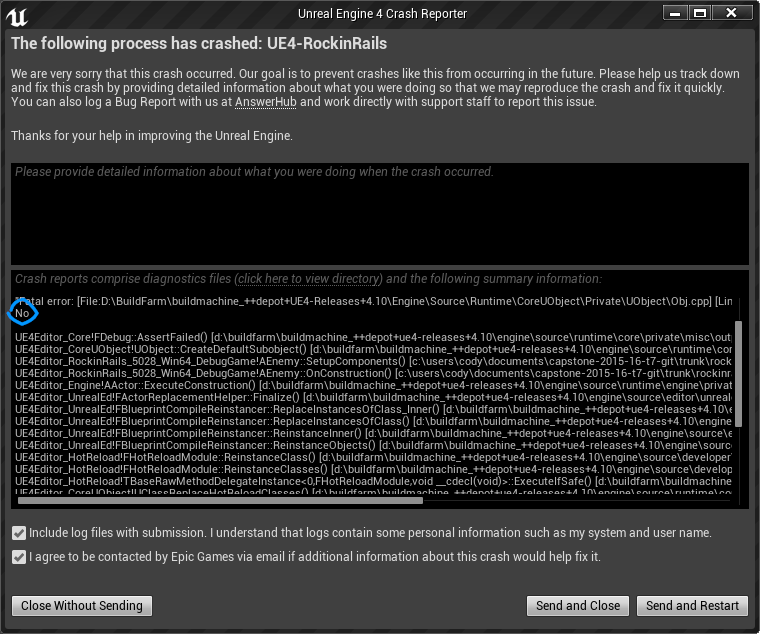

LS: Dr. Unreal or: How I Learned to Love the Crash

LS: How To Fake Being a Rock God

Team SAOS: The Root of the Problem

Team NAH: We’re Going To Woodstock!